Last Updated: 2024-10-16

Background

AI data engineers need easy and effective ways to handle document ingress and egress for popular repositories such as the Amazon Web Services (AWS) S3 object storage service.

Scope of the tutorial

In this tutorial, you will learn the NiFi list/fetch pattern for data ingress/egress operations with S3 buckets.

Learning objectives

Once you've completed this tutorial, you will be able to:

- Create & configure an AWSCredentialsProviderControllerService.

- Leverage ListS3 & FetchS3Object processors to handle document ingress.

- Utilize the PutS3Object processor for document egress.

Prerequisites

- Access to a NiFi cluster, such as Datavolo Cloud. See Datavolo Cloud: Getting started.

- Basic familiarity with building dataflows with Datavolo. See Build a simple NiFi flow.

- Optionally, writable access to an AWS S3 bucket.

Review the use case

The title of this tutorial summarizes the use case. You will retrieve documents from an S3 bucket and then store them in a different S3 bucket. The files will be PDF versions of Apache NiFi documentation.

Initialize the canvas

Access a Runtime

Log into Datavolo Cloud and access (create if necessary) a Runtime. Alternatively, leverage a Datavolo Server Runtime that you have access to.

This tutorial will function correctly on any current Apache NiFi cluster as the components are all community provided.

Set up a process group

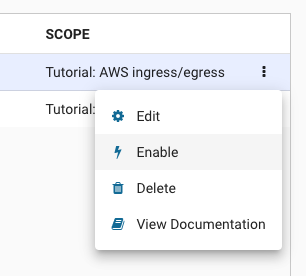

Create a Process Group (PG) named Tutorial: AWS ingress/egress and navigate into it.

Review controller services

The Apache NiFi Documentation states Controller Services "share functionality and state" for the benefit of processors. Here are two examples of these.

- AWSCredentialsProviderControllerService - a Controller Service that maintains common configuration information for a specific AWS account

- DBCPConnectionPool - a Controller Service that maintains a pool of database connections that can be utilized by processors

It is easy to see that common configuration properties as well as common runtime services are beneficial for maintainability as well as performance & scalability. These Controller Services also keep sensitive configuration information secured.
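To make the sharing idea concrete, here is a minimal Python sketch. It is not NiFi code: the class names `CredentialsService` and `Processor` are hypothetical stand-ins, and the validity rule is an assumption made for illustration. The only point is that several processors hold a reference to one service object, so a configuration change or an enable happens in a single place.

```python
from dataclasses import dataclass


@dataclass
class CredentialsService:
    """Hypothetical stand-in for a credentials Controller Service."""
    name: str
    anonymous: bool = False
    enabled: bool = False


@dataclass
class Processor:
    """Hypothetical stand-in for a processor that references a service."""
    name: str
    credentials: CredentialsService

    def is_valid(self) -> bool:
        # Assumption for this sketch: a processor is usable only while
        # the service it references is enabled.
        return self.credentials.enabled


# One shared service, referenced by two processors.
anonymous = CredentialsService(name="Anonymous credentials", anonymous=True)
lister = Processor(name="list", credentials=anonymous)
fetcher = Processor(name="fetch", credentials=anonymous)

states_before = (lister.is_valid(), fetcher.is_valid())
anonymous.enabled = True  # enable once; both processors see the change
states_after = (lister.is_valid(), fetcher.is_valid())
```

Because both processors point at the same object, there is no second copy of the credentials to keep in sync.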

Further discussion of this component type is beyond the scope of this tutorial. Fortunately, the question of "when do I need to create a Controller Service" is easily answered. If you need one, you'll find out easily enough, as it will surface as a required property in one of your processors.

For this lab, we will create the Controller Services we need before we use them in the Processor properties.

Create controller services

You will need separate Controller Services to contain your AWS credentials. The first will be configured for anonymous access as Datavolo has provided a read-only S3 bucket for the data ingress. Data egress is optional and will require you to BYOB (Bring Your Own Bucket) and appropriate access credentials.

Set up anonymous credentials

Right click on the empty canvas inside the new PG and select Controller Services. Click the + icon on the far right to begin to create a new Controller Service.

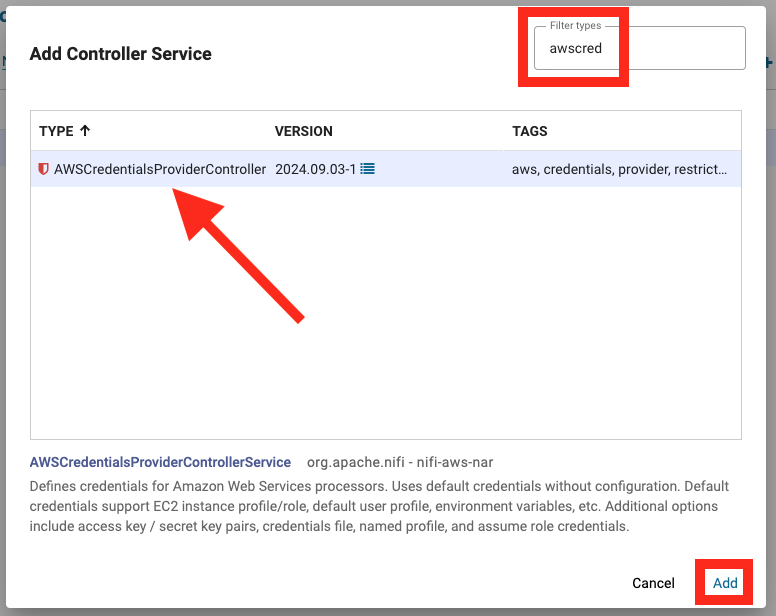

Type awscred in the Filter types search box and ensure AWSCredentialsProviderControllerService is selected before clicking on Add.

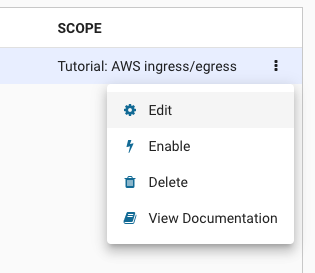

Click on Edit, accessible from the vertical ellipsis on the far right of the new Controller Service list item.

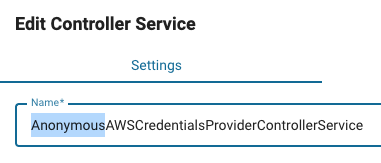

On the Settings tab, prefix the existing Name with Anonymous.

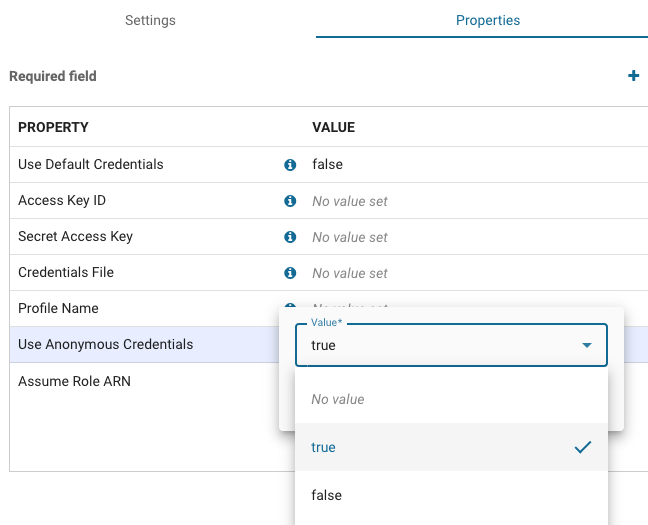

On the Properties tab, change Use Anonymous Credentials to true and click Apply to save the changes.

After saving the changes, right click on the vertical ellipsis and select Enable.

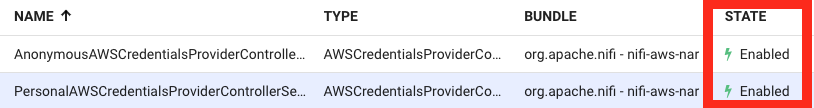

Click on Enable on the bottom right of Enable Controller Service and then Close when it appears. Verify your Controller Service shows a STATE of Enabled in the list.

Optionally, set up personal credentials

If you are going to write documents to S3, perform the remainder of the instructions in this step. As previously mentioned, you will need to Bring Your Own Bucket to this party to complete the document egress scenario.

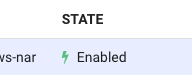

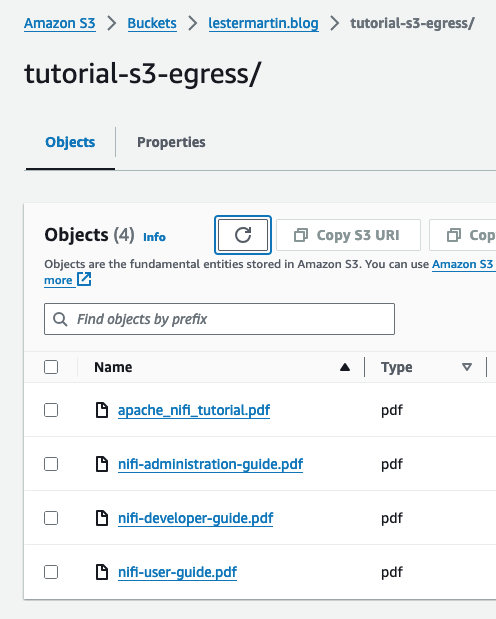

As an example, here is my lestermartin.blog S3 bucket located in the us-east-2 (Ohio) AWS region that I will be testing with. I have an empty "folder" named tutorial-s3-egress where files will be written.

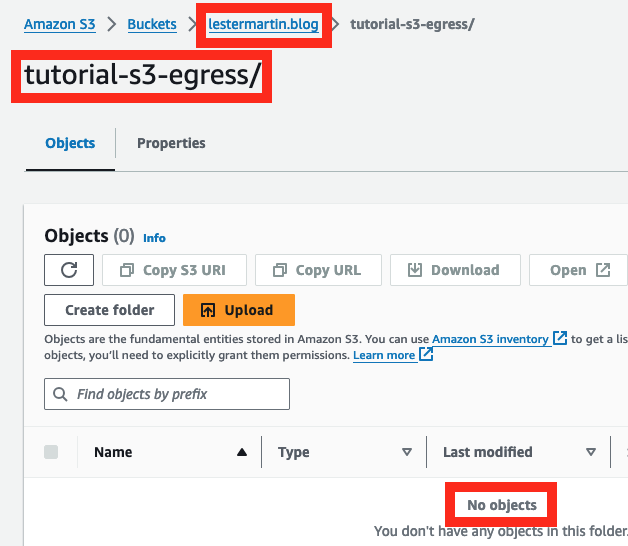

There are multiple ways to authenticate to AWS, but in my scenario I created Access Key ID and Secret Access Key values that have access to read/write my personal bucket. Again, these steps are optional.

Back in the Controller Services list, create another AWSCredentialsProviderControllerService instance and prefix the Name with Personal like before. In the Properties tab, you can add your personal Access Key ID and Secret Access Key values.

Apply the configuration changes and on the Controller Services list, Enable it as you did with the anonymous one you created earlier.

Click on Back to Process Group on the upper left to return to the canvas.

Leverage list/fetch pattern

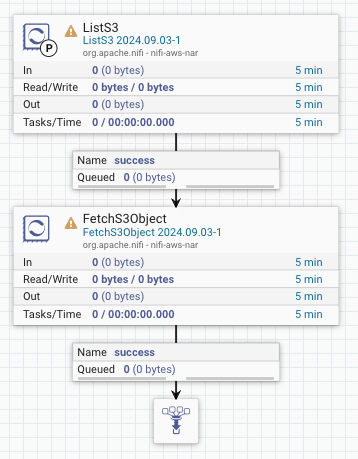

Add ListS3 & FetchS3Object processors and a Funnel to the canvas. Create connections for the success relationships so the flow looks similar to the following.

Configure/run list processor

In the Properties of ListS3, modify the following with the indicated values.

Bucket | |

Region | US East (N. Virginia) |

AWS Credentials Provider Service | AnonymousAWSCredentialsProviderControllerService |

Prefix | |

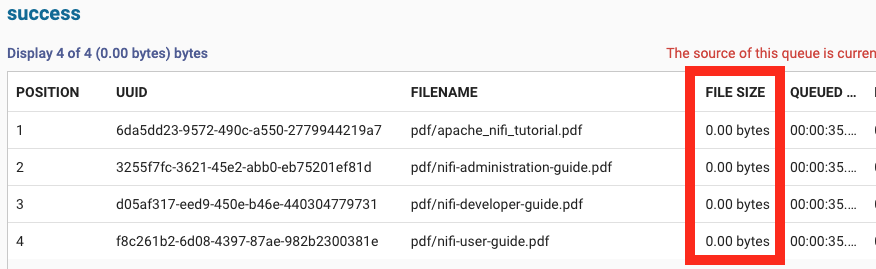

Start only ListS3 and verify the connection from it contains 4 FlowFiles. The FILENAME column suggests that each FlowFile contains the actual content of a listed file, but notice that the FILE SIZE for all of them indicates otherwise.

ListS3 only retrieves the details about the files that need to be ingested, not their content.

Configure/run fetch processor

In the Relationships of FetchS3Object, auto-terminate the failure relationship. Configure these Properties as shown.

Region | US East (N. Virginia) |

AWS Credentials Provider Service | AnonymousAWSCredentialsProviderControllerService |

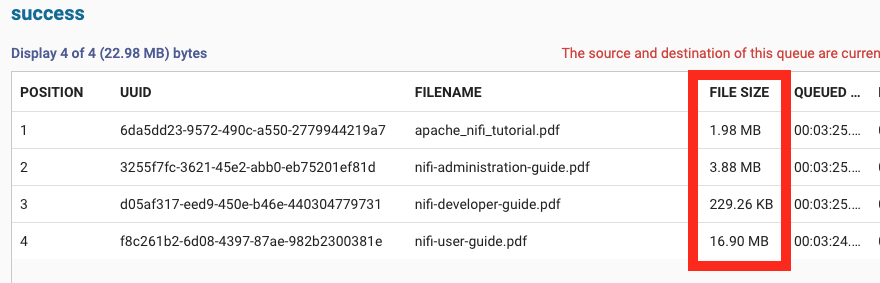

Start only FetchS3Object and verify the connection from it contains 4 FlowFiles. Each FlowFile still shows the same FILENAME, but the FILE SIZE now reflects the size of the PDF linked to each FlowFile.

You can use Download content on one or more of these PDF files if desired.
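The list/fetch split you just configured can be sketched in a few lines of Python. This is a simplified simulation under stated assumptions, not how NiFi is implemented: `BUCKET` is a hypothetical in-memory dictionary with made-up keys, and `FlowFile`, `list_objects`, and `fetch_object` are illustrative names. It shows why the listed FlowFiles have a name but no size until the fetch step runs.

```python
from dataclasses import dataclass

# Hypothetical in-memory "bucket": object key -> bytes. The keys are made up.
BUCKET = {
    "docs/overview.pdf": b"%PDF-1.7 overview",
    "docs/user-guide.pdf": b"%PDF-1.7 user guide, somewhat longer",
}


@dataclass
class FlowFile:
    """Simplified FlowFile: attributes (metadata) plus content bytes."""
    attributes: dict
    content: bytes = b""

    @property
    def size(self) -> int:
        return len(self.content)


def list_objects(bucket: dict, prefix: str = "") -> list[FlowFile]:
    """List step: emit one FlowFile per matching key, metadata only."""
    return [
        FlowFile(attributes={"filename": key})
        for key in sorted(bucket)
        if key.startswith(prefix)
    ]


def fetch_object(bucket: dict, flowfile: FlowFile) -> FlowFile:
    """Fetch step: use the listed filename to pull the actual bytes."""
    key = flowfile.attributes["filename"]
    return FlowFile(attributes=dict(flowfile.attributes), content=bucket[key])


listed = list_objects(BUCKET, prefix="docs/")
fetched = [fetch_object(BUCKET, ff) for ff in listed]

# Same filenames before and after; only the content size changes.
for before, after in zip(listed, fetched):
    print(before.attributes["filename"], before.size, "->", after.size)
```

One commonly cited reason for separating the two steps is that the inexpensive listing can run in one place while the heavier fetches are spread across the nodes of a cluster.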

Finish the flow

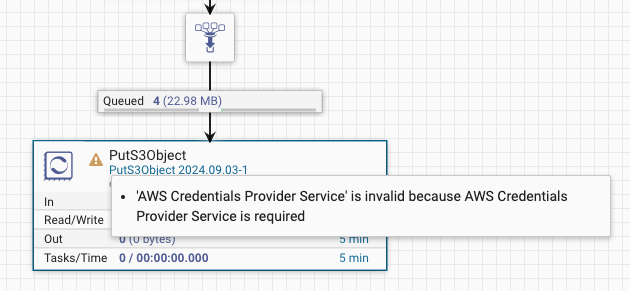

Add a PutS3Object processor to the canvas and create a connection to it from the existing Funnel. Auto-terminate both of the relationships it emits. Verify that the yellow triangle reports only a single configuration problem.

Configure/run put processor

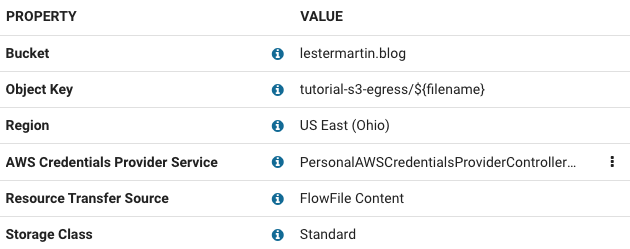

In the Properties of PutS3Object, select PersonalAWSCredentialsProviderControllerService for AWS Credentials Provider Service. Enter your personal values for Bucket and Region that align with the BYOB theme of this tutorial.

You can prefix the ${filename} value of Object Key with any additional path that you choose. For the example shown at the beginning of this tutorial, I am entering tutorial-s3-egress/ so that it will include that in the full file name.
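As a rough illustration of how the prefixed Object Key resolves, the following Python sketch substitutes `${filename}` with a FlowFile attribute value. It is a deliberately simplified stand-in, not NiFi's Expression Language: the `resolve` helper is hypothetical, handles only plain attribute references, and the file name is made up.

```python
import re


def resolve(template: str, attributes: dict) -> str:
    """Replace each ${name} in the template with the matching attribute value.

    Simplified: plain attribute references only, no Expression Language functions.
    """
    return re.sub(r"\$\{(\w+)\}", lambda m: attributes[m.group(1)], template)


attributes = {"filename": "nifi-user-guide.pdf"}  # hypothetical file name

# Prefix used in this tutorial's example, followed by the attribute reference.
object_key = resolve("tutorial-s3-egress/${filename}", attributes)
print(object_key)
```

With these inputs the resulting key is `tutorial-s3-egress/nifi-user-guide.pdf`, which is why the object appears inside the tutorial-s3-egress "folder" in the bucket.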

Your Properties will look similar, but not identical, to the following.

Run the Processor and verify that the 4 files are now stored in the appropriate S3 bucket you configured.

Congratulations, you've completed the NiFi doc ingress/egress with S3 tutorial!

What you learned

- How to create & configure an AWSCredentialsProviderControllerService.

- How to leverage ListS3 & FetchS3Object processors to handle document ingress.

- How to utilize the PutS3Object processor for document egress.

What's next?

Check out some of these codelabs...